The final part of a 14-hour marathon, starting with a question that is very important to me and, not least, to @MaryMyatt.

Q16. Does stake level matter?

There is no significant difference in testing benefits between high-stakes (g = 0.441) and low-stakes (g = 0.477) quizzes. Stake level plays little moderating role in the classroom testing effect: both high- and low-stakes quizzes benefit learning. Yang et al. (2021) go on to state:

“Hence, it is difficult to make a clear prediction about whether increasing the stake-level of class quizzes will boost or impair learning.”

Yang et al (2021)

They add that “…even low-stake quizzes can reliably promote learning” (p. 20).

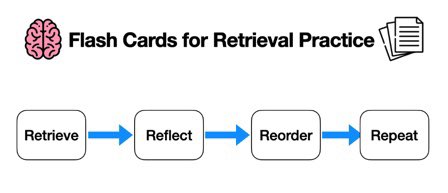

Perhaps this is where we need the rubber to hit the road. Now the post image makes sense – right?

I professionally and wholeheartedly believe that success underpins motivation in the classroom, and not the other way around. Hence, with CRM we have adopted low-stakes protocols and routines. We are as interested in learner agency, exposure, learner confidence, corrective feedback, meta-cognitive judgements and self-regulation, sometimes referred to as the indirect effects, as we are in the direct effects. With retrieval practice and Successive Relearning, the learner makes the correct retrieval.

Nevertheless, I accept that we cannot hide from the fact that there are points in a learner's academic career where tests will be high-stakes. Good news – regular retrieval practice reduces test anxiety – see Agarwal et al. (2014) and Sullivan (2017).

Second, there is one component that may be even more important than what is at stake: the learner's ability to accurately identify their own level of attainment or performance. This knowledge efficiently directs future actions. That is a separate post altogether.

Q17. Should students take class quizzes independently or collaboratively?

Even though the results show no significant difference in testing benefits between collaborative (g = 0.653) and independent (g = 0.490) quizzes, this very question piqued my interest. No firm conclusions can be drawn; however, this is a very interesting line of enquiry, and not one I had thought about before.

Q18. What research characteristics modulate the effect size of test-enhanced learning?

If I am honest, I found this question the most difficult to understand and apply. Although I could present the findings, I could not explain them. What I can report is that “instructor matching” (i.e., whether the quizzed and un-quizzed students are taught by the same instructors) does not significantly modulate test-enhanced learning (same instructor: g = 0.459; different instructors: g = 0.527).

Q19. What are the mechanisms underlying the classroom testing effect?

The research findings support the additional exposure (what I refer to consistently as Successive Relearning), transfer-appropriate processing, and motivation theories to account for the classroom testing effect.

What is not discussed is how to optimise Successive Relearning episodes, or the relative potency of covert and overt retrieval. Nor does the paper discuss the opportunities for sharing high-quality content, quality assurance across and between classrooms, or learning or testing metrics.

Done.