Having led a school and currently teaching full-time, I am immersed in a unique professional experience. Here is what has got a hold of my attention.

Aggregation is the friend of reliability

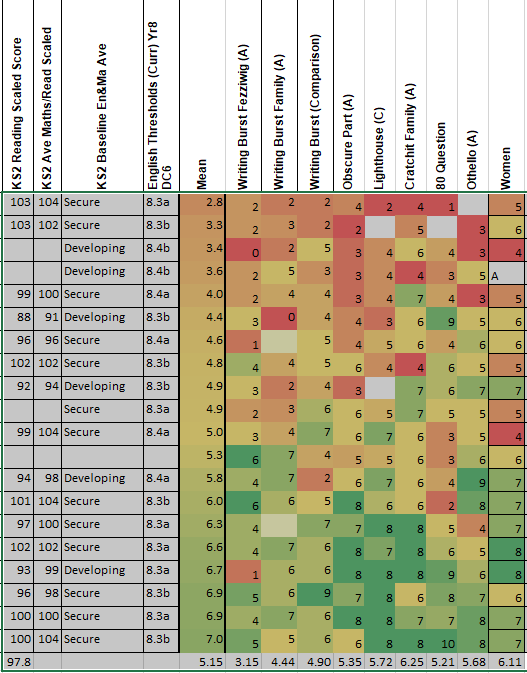

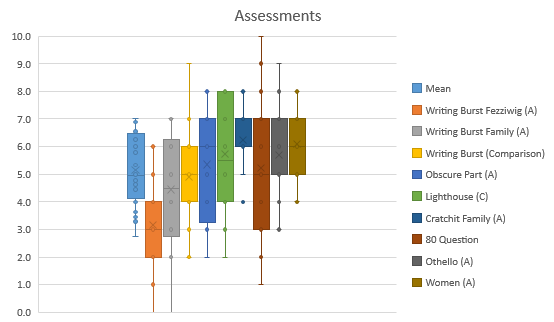

I am teaching a core subject. We have interim, in-class, controlled assessments. I have set up my teaching in ten-lesson meso-cycles, with each meso-cycle working towards a prescribed assessment. Working to a defined meso-cycle enables me to block out time and defer requests so that I can commit to marking student assessments and returning them as efficiently as possible. (I have no hard evidence, but it is my professional opinion that a swift marking turnaround benefits the feedback lesson that follows.) This particular meso-cycle coincides with the second whole-school “data drop”.

With nine assessed / controlled* pieces of student work per class in Year 9 (plus homework, a knowledge quiz and class interactions), I now have an aggregated grade and attainment profile for each student. My confidence in that aggregated grade is growing; after all, “aggregation is the friend of reliability.”

Controlled* – a writing burst: an independent writing task completed under class supervision (40 mins). It is worth noting that “short classroom tests” are rarely precise enough to measure annual changes in pupil progress.

It was this swing in professional confidence from data drop 1 to now (data drop 2) that prompted a leadership itch. An itch that recognises the significant inequalities in curriculum allocation. An itch that was even more distracting given that I am fully aware of the considerable work that goes into assessing and collating the data as a class teacher, the processes of collecting the data as a school leader and data administrator, and the investment, analysis and action from senior and middle leaders that follow a data collection, including chasing missing data, reporting to parents, celebration assemblies, commendations and so forth.

Leader itch?

Preceding the first data drop for Year 8 and 9, I was very cautious about what data I put into the system. I recognised that I was entering “student data” (to be shared with parents) on the back of just two controlled assessment points (with access to prior attainment and SATs scores) and with limited knowledge of the department’s assessment framework I was applying (even as an experienced teacher). This was in contrast to the ‘Approach to Learning’ or “Effort Grade” data entry, where I felt I had a good measure of the students’ behaviour and effort during lessons – against set criteria.

This time around, now with nine attainment points and an aggregated mean, I am more confident I ‘know’ the student attainment profile (and to a lesser degree their individual strengths and next steps qualitatively).
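A rough sketch of why nine points feel so different from two. It assumes, as a simplification, that each controlled assessment is an independent and equally noisy measure of the same underlying attainment, in which case the uncertainty in the mean shrinks with the square root of the number of assessments. The spread value `ASSUMED_SD` is hypothetical (one grade of noise per assessment), not something measured in my classes.

```python
import math


def standard_error(score_sd: float, n_assessments: int) -> float:
    """Standard error of the mean of n independent, equally noisy scores."""
    if n_assessments < 1:
        raise ValueError("need at least one assessment")
    return score_sd / math.sqrt(n_assessments)


# Hypothetical: each single assessment is 'off' by about one grade (assumption).
ASSUMED_SD = 1.0

for n in (2, 9):
    se = standard_error(ASSUMED_SD, n)
    print(f"{n} assessments: mean grade uncertain by roughly +/- {se:.2f} grades")
```

Under these assumptions, moving from two assessment points to nine roughly halves the uncertainty in the aggregated grade. Real assessments are not independent or equally noisy, so this is an illustration of the direction of travel rather than a precise estimate.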

Again, I recognise I can only afford this investment in class assessment because of the curriculum allocation given to English (5 x 50 min lessons per week).

Annual attainment tracking

The first data collection point in any school should be considered “noisy” at best. My reflections lead me to conclude that new teacher-student partnerships need time to bed in, assessments need to take place and attainment profiles need time to build up. This point is amplified for single-year teacher-student settings, Key Stage 3 (particularly Year 7) and new-to-GCSE classes.

Why would we collect central data before teachers know their students? What data is required to know your students? Prior attainment data, reading ages, SEND screening, possibly a standardised score of some kind. As a teacher, I would add a broad content pre-test (what have they learnt about X), an early assessment to communicate to students the importance of their learning, attention and retention in class / homework, and to a) inform planning and b) assess the quality of one’s own teaching. Then at least one or two (micro / in-class) controlled assessments (often before the end of the first half-term), or at least preceding the first data entry. If this were in fact the assessment aim, is it even feasible in subjects other than English, Maths and Science (given their reduced curriculum allocation)? I would suggest not – so why would we expect data at the end of the first half-term?

What about after half-term? What about at the end of the first term? Let’s look at the core (5 lessons a week), 3 lessons a week and 2 lessons a week scenarios.

- 5 x 50 min lessons per week, 60 lessons (6 meso-cycles) – yes.

- 3 x 50 min lessons per week, 36 lessons (3 meso-cycles) – very low confidence.

- 2 x 50 min lessons per week, 24 lessons (2 meso-cycles) – unreliable.
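The arithmetic behind those three scenarios can be sketched as follows. The 12-week term is my assumption (it is what 60 lessons at 5 a week implies), the ten-lesson meso-cycle is the one described earlier, and only complete meso-cycles are counted. The names `TERM_WEEKS` and `term_capacity` are made up for illustration.

```python
TERM_WEEKS = 12              # assumption: 60 lessons / 5 lessons per week
LESSONS_PER_MESO_CYCLE = 10  # ten-lesson meso-cycle, one assessment each


def term_capacity(lessons_per_week: int, term_weeks: int = TERM_WEEKS) -> tuple[int, int]:
    """Return (lessons in the term, complete meso-cycles in the term)."""
    lessons = lessons_per_week * term_weeks
    complete_cycles = lessons // LESSONS_PER_MESO_CYCLE  # partial cycles dropped
    return lessons, complete_cycles


for per_week in (5, 3, 2):
    lessons, cycles = term_capacity(per_week)
    print(f"{per_week} x 50 min per week: {lessons} lessons, {cycles} meso-cycles")
```

On a 3-lessons-a-week allocation, the 36 lessons leave six lessons over after three complete cycles, which is why the count is three rather than four.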

I hope you can see why this has caused me to reflect.

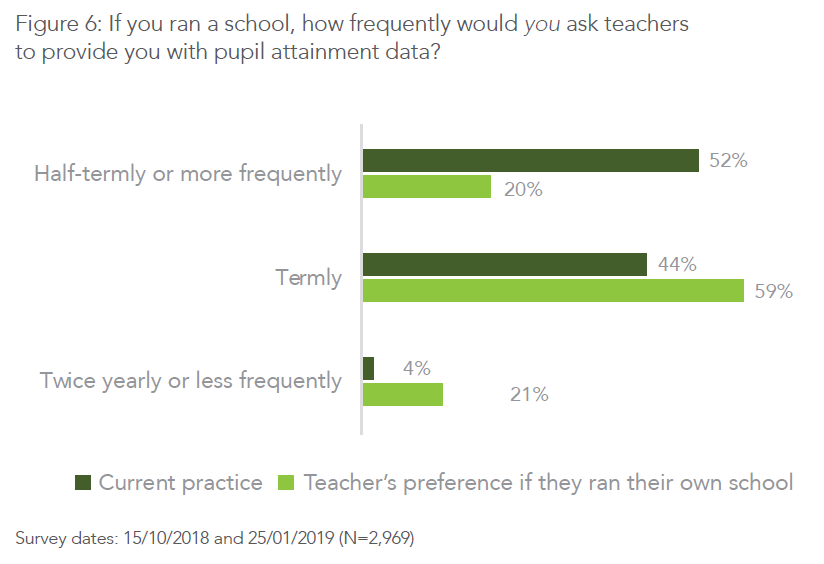

So what is the optimum data collection model? Having seen off the phrase “rapid progress” with the help of the Teacher Workload Advisory Group and Ofsted, have we seen off the “six data drops” a year model?

Not have more than two or three data collection points a year

Teacher Workload Advisory Group’s guidance

“Schools choosing to use more than two or three data collection points a year should have clear reasoning for what interpretations and actions are informed by the frequency of collection; the time taken to set assessments, collate, analyse and interpret the data; and the time taken to then act on the findings. If a school’s system for data collection is disproportionate, inefficient or unsustainable for staff, inspectors will reflect this in their reporting on the school.” (p 45).

Ofsted Education Inspection Framework (EIF)

The answer would appear to be no. Not for just over half of the teachers responding via the Teacher Tapp survey. The other half of the workforce are working to, and prefer, termly models, with a small minority collecting twice a year or less. The survey does not show or take into account sector or subject domains.

Itch – more like a rash

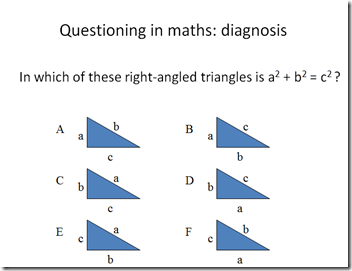

Before centrally collecting data – how secure are teachers in applying the assessment framework? I am thinking about teachers new to the school and new to the profession in particular. Has there been an opportunity to moderate teacher assessment? Has there been an opportunity to standardise assessment across the classes? Unless the quality (validity and reliability) and purpose of the assessments are understood and related to the curriculum – what inferences can be drawn?

What information best informs school leadership? Year group leadership? Class leadership? What information do parents require?

Should curriculum ‘mark books’ be housed within the MIS, promoting continual assessment, prompting coherent planning and shared intelligence? Possibly. Could an aggregated attainment grade be calculated within the MIS? Yes. Could this aggregated grade be ported to the data collection cycle and be more reliable than a single data-entry point? I expect it could – however, I would ensure the data entries were editable, so that outliers can be adjusted (medical, absence). Could AI be trained to investigate the student profiles? Or at least signpost concerns – yes.
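As a minimal sketch of what an editable, aggregated grade might look like, here is one possible shape. It does not describe any real MIS: the `MarkEntry` record, its `excluded` flag and the `aggregated_grade` function are all hypothetical, and a simple mean is only one of several ways the aggregation could be done.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class MarkEntry:
    """One mark-book entry (hypothetical structure, not a real MIS schema)."""
    assessment: str
    grade: float
    excluded: bool = False  # teacher override for an outlier
    note: str = ""          # reason for the override, e.g. medical, absence


def aggregated_grade(entries: list[MarkEntry]) -> Optional[float]:
    """Mean of the grades the teacher has not excluded; None if none remain."""
    included = [e.grade for e in entries if not e.excluded]
    return round(mean(included), 2) if included else None


# Made-up example data for one student.
mark_book = [
    MarkEntry("Writing burst 1", 5),
    MarkEntry("Writing burst 2", 6),
    MarkEntry("Writing burst 3", 5),
    MarkEntry("Writing burst 4", 2, excluded=True, note="returned from absence"),
]
print(aggregated_grade(mark_book))  # 5.33 (would be 4.5 with the outlier included)
```

Keeping the excluded entry in the mark book with a note, rather than deleting it, means the adjustment stays visible to anyone reviewing the profile later.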

I know that assessment informs my teaching; it gives me confidence ahead of next week’s parents’ evening conversations. It has value to the students, it has value to me as a class teacher. I am not as confident as I once was as to the value of termly data drops beyond core subjects. In fact, I am not confident at all. Nor am I as confident in drawing conclusions between classes, and less so between subjects, as I once was as a school leader after each data drop.

Another itch – the quality and validity of the assessments as connected to the curriculum… that will have to wait.