For the past two years, within our Academy’s context, I have overseen the move from a graded “Lesson Observations” schedule to a distributed, graded “Lesson Observations and Professional Development and Support” programme (LOPDS). When I arrived, all previous observations had been conducted by the VP with responsibility for Teaching and Learning and her senior colleagues. Given that one of the few benefits of graded lesson observations is the opportunity to actually observe (and learn from) your colleagues, this was my first and immediate change. I decided that the very next cycle would be undertaken through the line management structure. Of course, a distributed model requires staff training and quality assurance and, luckily, ASCL would be coming to my rescue. More about the training, standardisation and moderation shortly. Next on the to-do list: refreshing the form and regaining the trust of our staff. Lesson Observations were renamed LOPDS – Lesson Observations, Professional Development and Support. A mouthful, granted, but necessary.

The emphasis on the PDS (Professional Development and Support) component of the LOPDS process was a conscious effort to direct focus away from the often crucifying grade and back to the teaching process. As much as anything it was a statement of intent. We were not throwing the baby out with the bath water, but we wanted to encourage teachers to observe one another, for PDS. I know, controversial. No, really, it was controversial, with a small number of staff suggesting we were manoeuvring around union guidelines of x number of observations, for x minutes, when we were just suggesting that teachers observing one another teach was an excellent professional development opportunity. If I am honest, it was a leadership lesson: even though I know deep in my heart that peer observation and coaching are hugely valuable, changing organisational perceptions is a slow process. More moulding than carving.

Other than that, I believe our lesson observation practice reflected a relatively common approach to observing teachers’ teaching, at least in my professional experience: teachers are formally observed three times a year (0.4% of their teaching). The only difference is perhaps that we accepted both LOPDS and PDS observations as evidence towards Performance Reviews. Of course, most recently, the importance of graded lessons was inflated, with most Performance Related Pay decisions referencing lesson observation grades. Our view was that we wanted to encourage PDS observations, and in our PRP documentation we used the phrase “a minimum of.” Staff could be observed as frequently as they could arrange, to evidence the teaching profile they were trying to demonstrate in their Performance Reviews, though I am not sure “seven driving tests” Gove would have approved of this retake philosophy. These were the same Performance Reviews that often included a professional objective based on class FFT targets of some flavour. Don’t push me.

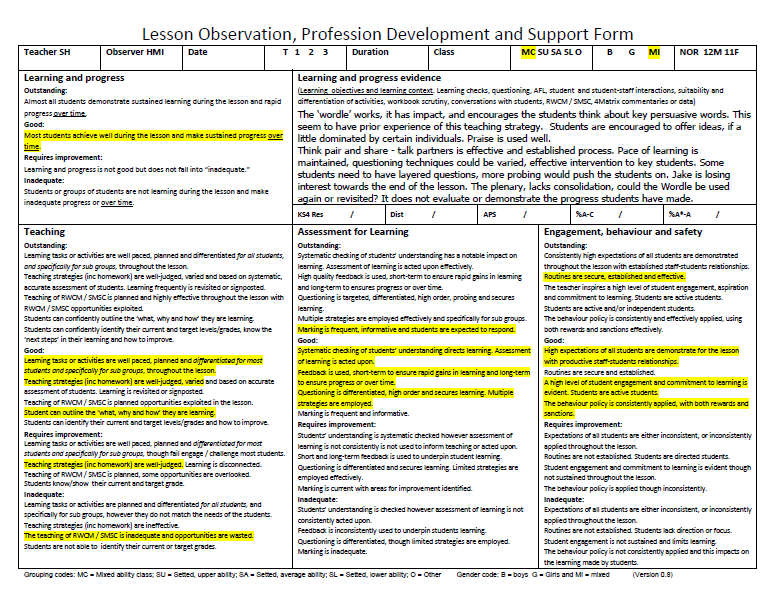

Right, back to the LOPDS form, process and analysis. Together with our Curriculum Leaders, we designed the first draft of the LOPDS form using the Ofsted handbook (scrutinising work and talking to pupils) and subject descriptions (unsearchable on the Ofsted website at the time, hidden?), our assessment of the needs of the school at that time (e.g. a sincere drive to improve the marking dialogue between teacher and pupil) and a handful of shared/pilfered/Googled lesson observation forms. With our first draft mocked up, we boldly ventured into the learning jungle and, since there was no mauling, after a few minor revisions I thought we were ready for a first release. Armed with a handsome pile of final draft LOPDS forms, two ASCL recorded lessons, and the feedback session and HMI evaluation videos, it was on to observer training and standardisation.

On the big screen, Sarah Hancock’s Year 9, middle-to-lower-ability English lesson. On the desk, our virgin forms (0.8 – not even production ready) and her planning and class details. Ready to hand out, a modelled LOPDS form. We attentively watched, observed and graded Sarah’s lesson. And even as I write this, I can’t help but imagine a gaggle of Donald Rumsfeld’s “unknown-unknowns” mischievously running between staff, tapping them on the shoulder and whispering in their ear, “She’s a one,” before running up to the next unsuspecting observer, smirking, and whispering, “She’s a three.” Then the next, “She is good. Definitely a two.”

We polled the grades and emphasised that at this juncture it was unprofessional and inaccurate to assign a grade. A professional conversation should follow to either consolidate your judgement or open lines of enquiry. We then watched the excellent feedback session and HMI evaluation and polled the grades a second time; there were, rightly so, a handful of single-grade changes. Up, and down. The discussion that followed should have signalled the complexities of graded lesson observations, and rightly so. I would have been hugely disappointed if teaching didn’t ignite debate. Yet, instead of questioning the process, I used the three-grade variance of our judgements as justification for further standardisation and the second lesson. Regardless, we moved to the proposed distributed model and the new LOPDS form for the final observation of the Performance Review with little commotion. Even if grade validity and reliability were flawed, at least more teachers would be observing lessons, possibly one another’s, than before, and the feedback video was a coaching master class.
